White House proposes tech 'bill of rights' to limit AI harms

Top science advisers to President Joe Biden are calling for a new “bill of rights” to guard against powerful new artificial intelligence technology.

The White House’s Office of Science and Technology Policy on Friday launched a fact-finding mission to look at facial recognition and other biometric tools used to identify people or assess their emotional or mental states and character.

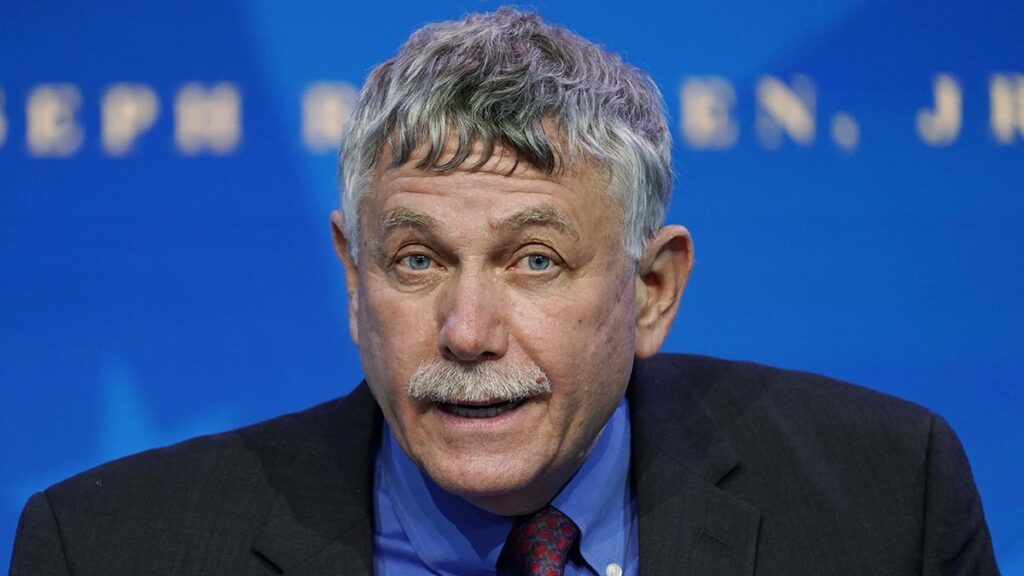

Biden’s chief science adviser, Eric Lander, and the deputy director for science and society, Alondra Nelson, also published an opinion piece in Wired magazine detailing the need to develop new safeguards against faulty and harmful uses of AI that can unfairly discriminate against people or violate their privacy.

“Enumerating the rights is just a first step,” they wrote. “What might we do to protect them? Possibilities include the federal government refusing to buy software or technology products that fail to respect these rights, requiring federal contractors to use technologies that adhere to this ‘bill of rights,’ or adopting new laws and regulations to fill gaps.”

This is not the first time the Biden administration has voiced concerns about harmful uses of AI, but it is one of the administration’s clearest steps toward acting on them.

European regulators have already taken measures to rein in the riskiest AI applications that could threaten people’s safety or rights. European Parliament lawmakers took a step this week in favor of banning biometric mass surveillance, though none of the bloc’s nations are bound by Tuesday’s vote, which called for new rules blocking law enforcement from scanning facial features in public spaces.

Political leaders in Western democracies have said they want to balance a desire to tap into AI’s economic and societal potential with the need to address growing concerns about the reliability of tools that can track and profile individuals and make recommendations about who gets access to jobs, loans and educational opportunities.

A federal document filed Friday seeks public comments from AI developers, experts and anyone who has been affected by biometric data collection.

The software trade association BSA, backed by companies such as Microsoft, IBM, Oracle and Salesforce, said it welcomed the White House’s attention to combating AI bias but is pushing for an approach that would require companies to do their own assessment of the risks of their AI applications and then show how they will mitigate those risks.

“It enables the good that everybody sees in AI but minimizes the risk that it’s going to lead to discrimination and perpetuate bias,” said Aaron Cooper, the group’s vice president of global policy.